Edge Computing: Decentralizing Data Processing to Enhance Latency and Throughput

Author: Андрей Макаров

In an increasingly connected world, demand for faster and more efficient data processing keeps growing. Traditional cloud computing, where data is sent to centralized data centers for processing, is showing its limits, particularly in latency and throughput. Edge computing addresses this by decentralizing data processing and moving it closer to the source. Doing so can lower latency and raise throughput, which makes edge computing an important component of future digital infrastructure.

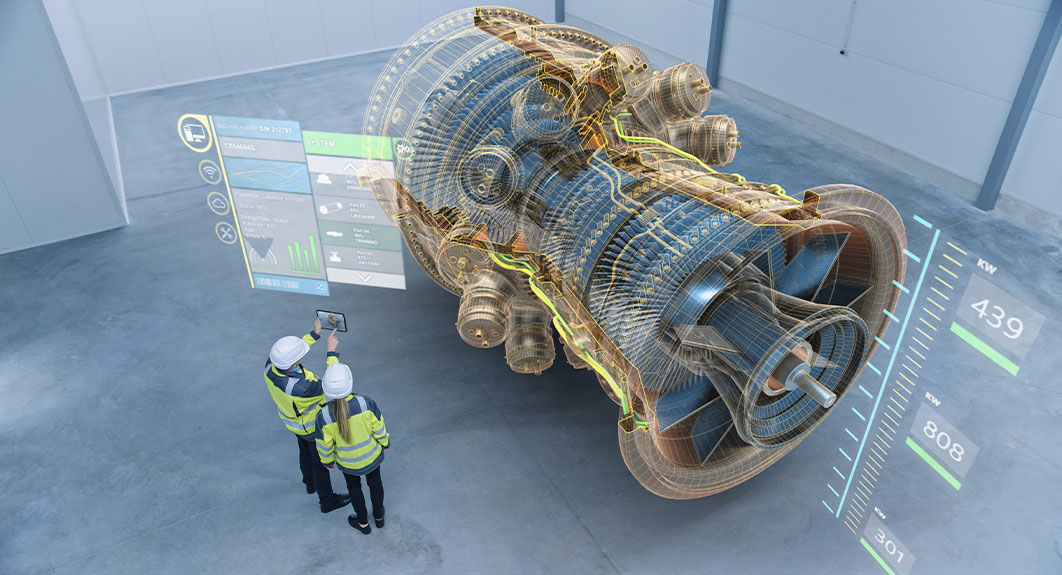

Edge computing refers to the practice of processing data near the edge of the network, close to where the data is generated, rather than relying on distant cloud servers. Moving the processing closer shortens the path that data travels back and forth, which lowers latency. For applications that require real-time responses, such as autonomous vehicles, smart cities, or industrial automation, reduced latency is crucial. With edge computing, decisions can be made close to real time, allowing these systems to function more effectively and safely.
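To make the latency argument concrete, here is a rough back-of-the-envelope sketch. It assumes a signal speed in optical fiber of about 200,000 km/s (roughly two thirds of the speed of light in a vacuum) and uses hypothetical distances of 10 km to an edge node and 1,500 km to a cloud data center. It only estimates propagation delay; processing, queuing, and routing time are ignored, so real round trips will be longer.

```python
# Approximate signal speed in optical fiber (assumption: about 2/3 of c).
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Estimate round-trip propagation delay in milliseconds.

    Only the travel time over the link is counted; processing,
    queuing, and routing delays are deliberately left out.
    """
    one_way_seconds = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_seconds * 1000


# Hypothetical distances, chosen purely for illustration.
edge_rtt = round_trip_ms(10)      # nearby edge node, about 0.1 ms
cloud_rtt = round_trip_ms(1500)   # distant data center, about 15 ms

print(f"edge:  {edge_rtt:.2f} ms")
print(f"cloud: {cloud_rtt:.2f} ms")
```

Even in this simplified model, distance alone sets a floor on the response time that no amount of server capacity can remove.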

Another key advantage of edge computing is its ability to improve throughput. As more devices become connected and the volume of data being generated increases, centralized data centers can struggle to keep up, leading to bottlenecks and slower processing times. Edge computing addresses this issue by distributing the processing load across multiple edge locations. This decentralized approach allows for a higher volume of data to be processed simultaneously, improving overall system efficiency and enabling more devices to be connected without compromising performance.

Edge computing is also particularly beneficial in environments where bandwidth is limited or expensive. In traditional models, large amounts of data are sent to and from centralized servers, consuming significant bandwidth and potentially causing network congestion. By processing data locally, edge computing reduces the need for constant data transmission, which cuts bandwidth usage and the associated costs. This makes it an attractive solution for industries like telecommunications, manufacturing, and healthcare, where efficient data management is essential.
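One common way to save bandwidth is to aggregate readings at the edge and transmit only a summary. The sketch below is illustrative, not a reference implementation: the `summarize` function, the simulated temperature readings, and the JSON payload format are all assumptions made for this example.

```python
import json
import random
from statistics import mean


def summarize(readings):
    """Reduce a window of raw readings to a small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Simulated sensor window (hypothetical data, fixed seed for repeatability).
random.seed(0)
raw = [round(random.uniform(20.0, 25.0), 2) for _ in range(1000)]

summary = summarize(raw)

# Compare the payload sizes if each were sent upstream as JSON.
raw_bytes = len(json.dumps(raw).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))

print(f"raw payload:     {raw_bytes} bytes")
print(f"summary payload: {summary_bytes} bytes")
```

The trade-off is that the central system no longer sees individual readings, so this approach fits cases where aggregate values are enough for downstream analysis.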

Security and privacy can also benefit from edge computing. With data being processed closer to its source, there is less need to transmit sensitive information over long distances, where it might be more vulnerable to interception or breaches. Localized processing allows businesses to apply security measures directly at the edge, which can help protect data and support compliance with privacy regulations. This is especially important in sectors that handle large amounts of personal or sensitive information.

The potential applications of edge computing are vast and varied. In healthcare, for instance, wearable devices can use edge computing to monitor patient vitals in real time, enabling immediate analysis and response without the delay of sending data to a distant server. In smart cities, edge computing allows for real-time traffic management, public safety monitoring, and efficient use of resources. The industrial sector benefits from edge computing through predictive maintenance, where machines are monitored and analyzed in real time to prevent breakdowns and optimize performance.
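As an illustration of the predictive maintenance case, the sketch below shows one simple way an edge device might flag unusual sensor readings locally, without contacting a remote server. The `VibrationMonitor` class, the window size, and the threshold are hypothetical choices for this example; production systems typically use more sophisticated models.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flag readings that deviate strongly from recent history (z-score)."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)  # most recent normal readings
        self.threshold = threshold           # allowed deviations from the mean
        self.min_samples = min_samples       # readings needed before judging

    def observe(self, value):
        """Return True if the reading looks anomalous."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        # Keep anomalies out of the baseline so they do not skew it.
        if not anomalous:
            self.history.append(value)
        return anomalous


monitor = VibrationMonitor()
normal = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0]
flags = [monitor.observe(v) for v in normal]
spike_flag = monitor.observe(5.0)  # a sudden spike in vibration

print(flags)
print(spike_flag)
```

Because the check runs on the device itself, only the flagged events need to be reported upstream, which ties back to the latency and bandwidth points made earlier.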

In conclusion, edge computing represents a significant shift in the way data is processed, offering solutions to the challenges of latency, throughput, bandwidth, and security. By decentralizing data processing and bringing it closer to the source, edge computing is set to play a crucial role in the development of faster, more efficient, and more secure digital systems. As technology evolves and the demand for real-time data processing grows, edge computing will help enable the next generation of connected devices and applications.
